It should be easy to perform A/B experiments with live models within the MLOps framework. A/B testing: no matter how solid cross-validation we think we’re doing, we never know how the model will perform until it actually gets deployed.As we learn the truth, however, we need to inform the model to report on how well it is actually doing.

Why? Typically we run predictions on new samples where we do not yet know the ground truth.

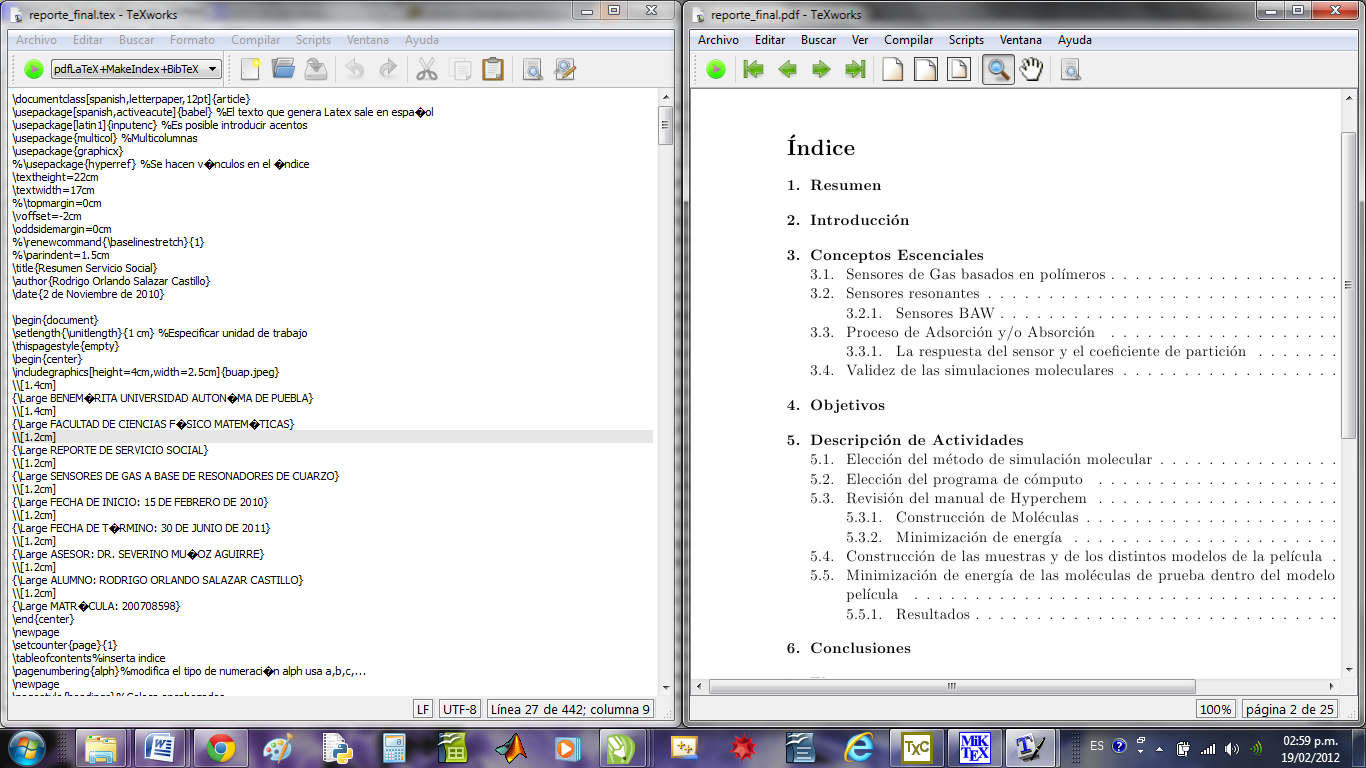

GET STARTED TEXWORKS TUTORIAL CODE

Versioning: it should be possible to go back in time to inspect everything relating to a given model, e.g., what data & code was used.should meet minimum performance on a test set - should perform well on synthetic use case-specific datasets Model unit testing: every time we create, change, or retrain a model, we should automatically validate the integrity of the model, e.g.We want the handover from ML training to deployment to be as smooth as possible, which is more likely the case for such a platform than ML models developed in different local environments. This platform should enable secure access to data sources (e.g., from data engineering workflows).

Development platform: a collaborative platform for performing ML experiments and empowering the creation of ML models by data scientists should be considered part of the MLOps framework.It is all about getting ML models into production but what does that mean? For this post, I will consider the following list of concepts, which I think should be considered as part of an MLOps framework: Plenty of information can be found online discussing the conceptual ins and outs of MLOps, so instead, this article will focus on being pragmatic with a lot of hands-on code, etc., basically setting up a proof of concept MLOps framework based on open-source tools.

GET STARTED TEXWORKS TUTORIAL SOFTWARE

Machine Learning Operations (MLOps) refers to an approach where a combination of DevOps and software engineering is leveraged in a manner that enables deploying and maintaining ML models in production reliably and efficiently. In this post, I’ll go over my personal thoughts (with implementation examples) on principles suitable for the journey of putting ML models into production within a regulated industry i.e., when everything needs to be auditable, compliant, and in control - a situation where a hacked together API deployed on an EC2 instance is not going to cut it. Depending on the level of ambition, it can be surprisingly hard, actually. People vector created by pch.vector - Getting machine learning (ML) models into production is hard work.
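Model unit testing can be made concrete with a small gate that runs after every training job. The following is a minimal sketch, not the author's implementation: it assumes a model is any Python callable returning a class label, and `validate_model`, the 0.8 accuracy threshold, and the toy datasets are all hypothetical.

```python
from typing import Callable, Sequence, Tuple

# Assumption: a "model" is any callable mapping a feature vector to a label.
Model = Callable[[Sequence[float]], int]
Dataset = Sequence[Tuple[Sequence[float], int]]


def accuracy(model: Model, data: Dataset) -> float:
    """Fraction of samples the model labels correctly."""
    if not data:
        raise ValueError("dataset must not be empty")
    correct = sum(1 for x, y in data if model(x) == y)
    return correct / len(data)


def validate_model(model: Model, test_set: Dataset, synthetic_sets: dict,
                   min_accuracy: float = 0.8) -> list:
    """Return failure messages; an empty list means the model passed.

    min_accuracy is a hypothetical threshold; choose one per use case.
    """
    failures = []
    test_acc = accuracy(model, test_set)
    if test_acc < min_accuracy:
        failures.append(f"test set accuracy {test_acc:.2f} < {min_accuracy}")
    # Each synthetic, use-case-specific dataset must also clear the bar.
    for name, data in synthetic_sets.items():
        acc = accuracy(model, data)
        if acc < min_accuracy:
            failures.append(f"synthetic set '{name}' accuracy {acc:.2f} < {min_accuracy}")
    return failures


# Toy threshold model and datasets, purely for illustration.
def toy_model(x):
    return int(x[0] > 0.5)


test_set = [([0.9], 1), ([0.1], 0), ([0.7], 1), ([0.2], 0)]
synthetic = {
    "edge_cases": [([0.51], 1), ([0.49], 0)],
    "all_high": [([0.95], 1), ([0.99], 1)],
}

failures = validate_model(toy_model, test_set, synthetic)
print(failures)  # []
```

In a CI pipeline, a non-empty `failures` list would fail the build and keep the model from being deployed.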
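One lightweight way to make versioning inspectable is to store a manifest next to every trained model that records content hashes of the exact data and code used. The sketch below assumes the data and training code live in local files; `build_manifest`, `matches_manifest`, the manifest layout, and the file names are hypothetical. In practice, Git commits and a dedicated data-versioning tool would typically replace the raw file hashes.

```python
import hashlib
import json
import os
import tempfile
from datetime import datetime, timezone


def sha256_of_file(path: str, chunk_size: int = 1 << 16) -> str:
    """Hash a file in chunks so large datasets need not fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(model_name: str, version: str, data_path: str,
                   code_path: str, params: dict) -> dict:
    """Record what went into a model so it can be inspected later."""
    return {
        "model": model_name,
        "version": version,
        "data": {"path": data_path, "sha256": sha256_of_file(data_path)},
        "code": {"path": code_path, "sha256": sha256_of_file(code_path)},
        "params": params,
        "created_at": datetime.now(timezone.utc).isoformat(),
    }


def matches_manifest(manifest: dict) -> bool:
    """True if the data and code on disk are byte-identical to what was recorded."""
    return all(
        sha256_of_file(manifest[key]["path"]) == manifest[key]["sha256"]
        for key in ("data", "code")
    )


# Illustration with throwaway files (hypothetical names and contents).
with tempfile.TemporaryDirectory() as tmp:
    data_path = os.path.join(tmp, "train.csv")
    code_path = os.path.join(tmp, "train.py")
    with open(data_path, "w") as fh:
        fh.write("x,y\n0.1,0\n0.9,1\n")
    with open(code_path, "w") as fh:
        fh.write("print('training')\n")

    manifest = build_manifest("churn-model", "1.0.0", data_path, code_path, {"max_depth": 3})
    print(json.dumps(manifest, indent=2))
    unchanged = matches_manifest(manifest)    # True: nothing has changed yet
    with open(data_path, "a") as fh:
        fh.write("0.5,1\n")                   # the training data is modified
    changed = not matches_manifest(manifest)  # True: the data hash no longer matches
```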
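The point about ground truth arriving later can be sketched as a join between logged predictions and labels that trickle in afterwards. This is a minimal, in-memory illustration under stated assumptions: each prediction carries a stable request id, the task is classification, and `PredictionMonitor` and its methods are hypothetical names. A real setup would persist both logs in a database.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class PredictionMonitor:
    """Stores predictions by request id and scores them once ground truth arrives.

    Joining on a request id is an assumption; any stable key would do.
    """
    predictions: Dict[str, int] = field(default_factory=dict)
    labels: Dict[str, int] = field(default_factory=dict)

    def log_prediction(self, request_id: str, prediction: int) -> None:
        self.predictions[request_id] = prediction

    def log_ground_truth(self, request_id: str, label: int) -> None:
        if request_id not in self.predictions:
            raise KeyError(f"unknown request id: {request_id}")
        self.labels[request_id] = label

    def coverage(self) -> float:
        """Share of predictions for which the truth is already known."""
        if not self.predictions:
            return 0.0
        return len(self.labels) / len(self.predictions)

    def live_accuracy(self) -> Optional[float]:
        """Accuracy on the labelled subset; None until any label has arrived."""
        if not self.labels:
            return None
        correct = sum(self.predictions[i] == y for i, y in self.labels.items())
        return correct / len(self.labels)


monitor = PredictionMonitor()
for rid, pred in [("a", 1), ("b", 0), ("c", 1), ("d", 1)]:
    monitor.log_prediction(rid, pred)

# Later, labels arrive for only some of the requests.
monitor.log_ground_truth("a", 1)
monitor.log_ground_truth("b", 1)
print(monitor.coverage(), monitor.live_accuracy())  # 0.5 0.5
```

Reporting `coverage()` alongside accuracy matters, because accuracy computed on a small labelled fraction of traffic can be misleading.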
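A/B testing live models mostly comes down to splitting traffic between the current model and a challenger and recording outcomes per variant. The sketch below assumes hash-based assignment so each user consistently sees the same model; `assign_variant`, the salt, the 50/50 split, and the two toy models are hypothetical, and the simulated labels stand in for ground truth that would normally arrive later.

```python
import hashlib
import random
from collections import defaultdict


def assign_variant(user_id: str, share_b: float = 0.5, salt: str = "exp-1") -> str:
    """Deterministically route a user to model 'A' or 'B'.

    Hashing salt + user id keeps each user on the same variant across requests;
    changing the (hypothetical) salt reshuffles users for a new experiment.
    """
    digest = hashlib.sha256(f"{salt}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) / 0xFFFFFFFF  # roughly uniform in [0, 1]
    return "B" if bucket < share_b else "A"


# Two toy "live" models: the current champion (A) and a challenger (B).
MODELS = {
    "A": lambda x: int(x > 0.5),
    "B": lambda x: int(x > 0.4),
}

# Per-variant counters, filled in as ground truth arrives.
stats = defaultdict(lambda: {"n": 0, "correct": 0})


def serve(user_id: str, x: float) -> tuple:
    """Predict with whichever model the user is assigned to."""
    variant = assign_variant(user_id)
    return variant, MODELS[variant](x)


def record_outcome(variant: str, prediction: int, label: int) -> None:
    stats[variant]["n"] += 1
    stats[variant]["correct"] += int(prediction == label)


# Simulated traffic; in production the labels would arrive later.
rng = random.Random(0)
for i in range(1000):
    x = rng.random()
    label = int(x > 0.45)  # hypothetical ground truth
    variant, pred = serve(f"user-{i}", x)
    record_outcome(variant, pred, label)

for variant in sorted(stats):
    s = stats[variant]
    print(variant, s["n"], round(s["correct"] / s["n"], 3))
```

Before promoting the challenger, one would also check whether the difference between variants is statistically significant (for example with a two-proportion test) rather than comparing raw accuracies.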
